30.08.2023

Simona Todesco

Research for Genderfair Translation at Textshuttle

Gender bias in AI models is a widespread problem. Such models are usually trained on biased datasets and therefore tend to reproduce unintended gender stereotypes, and with them societal prejudices. In automatic translation, for example, the English words “teacher” and “nurse” are often translated into German as the masculine form “Lehrer” and the feminine form “Krankenpflegerin”, respectively, even when the other gender form would be correct.

Research

Textshuttle is taking a pioneering role in this field through its active involvement in scientific research. Chantal Amrhein, Florian Schottmann and Samuel Läubli, in collaboration with Rico Sennrich of the University of Zurich, have developed a new approach and tested how well readers accept the resulting texts. The paper was published at ACL 2023.

Gender-fair rewriting is a new approach to de-biasing text while preserving its meaning and coherence. It works in two steps. First, the gender-specific terms or phrases that make a sentence biased are identified. Then, reformulation techniques replace them with gender-fair alternatives. By using contextual embeddings and semantic modification, the AI model aims to keep the rewritten sentences faithful to their original meaning and context.

Currently, a gender-fair translation in Textshuttle is produced in two steps. First, one of our translation models produces a translation. Then, a second model reformulates this translation into gender-fair language. Like our translation models, the reformulation model learns from large amounts of data: during training, it picks up patterns in the language that allow it to convert the relevant words in its input text into a gender-fair form.
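The two-step process can be pictured as a simple composition of two models. The sketch below is a minimal illustration under stated assumptions, not Textshuttle's implementation: the names `gender_fair_translate`, `toy_translate` and `toy_rewrite` are hypothetical, and the lookup tables merely stand in for what are, in the real system, trained neural models.

```python
from typing import Callable

# Any "model" here is simply a function that maps a text to a text.
TextModel = Callable[[str], str]


def gender_fair_translate(source: str, translate: TextModel, rewrite: TextModel) -> str:
    """Step 1: translate the source text. Step 2: rewrite the translation gender-fairly."""
    draft = translate(source)
    return rewrite(draft)


# Hypothetical toy stand-ins for the two trained models (illustration only).
TOY_TRANSLATIONS = {"The teachers are here.": "Die Lehrer sind hier."}
TOY_REWRITES = {"Lehrer": "Lehrer:innen"}


def toy_translate(text: str) -> str:
    # Return a canned translation; fall back to the unchanged input.
    return TOY_TRANSLATIONS.get(text, text)


def toy_rewrite(text: str) -> str:
    # Replace whole words that have a gender-fair alternative in the table.
    return " ".join(TOY_REWRITES.get(word, word) for word in text.split(" "))


print(gender_fair_translate("The teachers are here.", toy_translate, toy_rewrite))
# Die Lehrer:innen sind hier.
```

One consequence of keeping the stages separate is that the rewriting step only ever sees target-language text, so in principle it can be placed after any translation model.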

Public acceptance

The published approach was then tested for acceptance among members of the public. The evaluation involved 294 volunteers (141 female, 82 non-binary, 55 male, 16 other) and was based on six paragraphs taken from German-language texts containing gender-biased words. Each paragraph was presented in three versions: the original, a version rewritten with the gender-fair approach, and a manually created reference.

Participants were asked to rate the gender-fairness of each paragraph on a scale from 1 (strongly disagree) to 5 (strongly agree). On average, the texts produced with the gender-fair approach were rated better than the original texts, but worse than the texts created by humans (the reference). Particularly noteworthy: although the texts produced with the gender-fair approach are not always perfect, a majority of respondents nevertheless preferred them.

Gender-fair language has not yet gained full acceptance in society as a whole, and there are many different ways of implementing it in practice. Both factors complicate the development of new approaches. Moreover, it is essential to ensure that the AI model does not itself introduce new biases or unintentionally change the meaning of the original text. Despite these shortcomings, the survey results show that the new approach can help promote gender-fair language.

From theory into practice

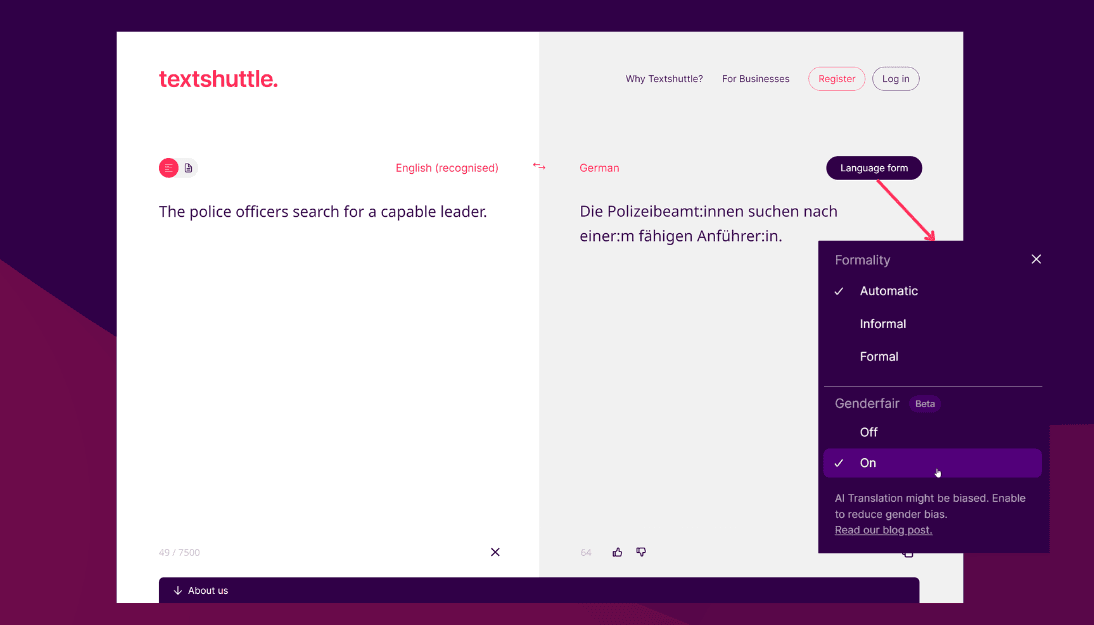

The ultimate goal of this research initiative is to put gender-fair language into practice and advance the cause of inclusive language. The beta version offers a first practical implementation, which we aim to improve continuously. At textshuttle.com, users translating into German or English can choose gender-fair translation as the language form, so they can decide for themselves whether the text should be formulated in a gender-fair way. For German, the beta initially uses the “gender colon”; in the future, we aim to add other forms, such as the “gender asterisk”.
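To show how the two German notations differ, here is a small sketch that builds an inclusive plural with either marker. It is deliberately simplified and hypothetical (the function `inclusive_plural` is not part of any Textshuttle interface): it only covers nouns such as “Lehrer” or “Krankenpfleger”, whose masculine plural equals the singular and whose feminine plural simply adds “innen”.

```python
# ":" is the gender colon, "*" the gender asterisk.
VALID_MARKERS = {":", "*"}


def inclusive_plural(noun: str, marker: str = ":") -> str:
    """Build a German gender-inclusive plural such as "Lehrer:innen".

    Simplified assumption: the noun's feminine plural is noun + "innen"
    with no other change. Irregular nouns (e.g. "Arzt" -> "Ärzt:innen")
    are not handled.
    """
    if marker not in VALID_MARKERS:
        raise ValueError(f"unsupported marker: {marker!r}")
    return f"{noun}{marker}innen"


print(inclusive_plural("Lehrer"))              # Lehrer:innen
print(inclusive_plural("Lehrer", "*"))         # Lehrer*innen
print(inclusive_plural("Krankenpfleger"))      # Krankenpfleger:innen
```

Real rewriting has to handle far more than this string pattern, including articles, pronouns, adjective endings and irregular nouns, which is one reason a learned model is used rather than fixed rules.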

Conclusion

As a provider of AI models, we have a responsibility to promote fairness and fight prejudice. Initial feedback from users has been positive. Going forward, gender-fair language will continue to play a role in the research and development of our products, and feedback from our users drives our continuous improvement. The beta version is available now at textshuttle.com. We very much welcome suggestions and feedback.

More information

Want to find out more about Textshuttle, or looking for experts in AI? Visit our Media Corner. Here, you’ll find the press release on the launch of Textshuttle. Textshuttle’s business solution is used by thousands of employees and dozens of professional translation teams in Swiss and multinational corporations such as Swiss Life, Migros Bank and OBI Group.